“The saddest aspect of life right now is that science gathers knowledge faster than society gathers wisdom.” — Isaac Asimov

Recent events have forced me to re-evaluate my relationship to the topic of Artificial Intelligence (AI).

Daniel Friedman, head of education at the International Society for the Systems Sciences (ISSS), held an online discussion last month that explored how large language models (LLMs) like ChatGPT could be useful for the organization.

Watching the session made me realize that it’s time to pull my head out of the sand. I can no longer sit on the sidelines, waiting for the “right time” to start using these technologies.

I have fears about how widespread adoption of AI systems will affect me and society at large. Are we wise enough to handle this technology?

But I am also deeply curious about the role that systems science can play in the development of AI systems that do enable greater flourishing for humanity.

This piece will cover my personal experience with LLMs and explore why I believe systems science can help address some of my fears around AI.

My Experience with LLMs

When I first got access to ChatGPT in early December, I was enthralled.

I immediately started asking it questions about systems science, and was blown away by the experience.

It felt surreal, like the dawn of a new era. All of a sudden, I had immediate access to the types of deep and meaningful conversations about systems science that I had been craving for so long.

But this initial sense of wonder and curiosity was quickly followed by fear and disillusionment.

First came the suspicion and skepticism when I shared ChatGPT with my girlfriend and a few close friends.

“How ‘intelligent’ is this thing? What happens when I ask it about how the Palestinian/Israeli conflict could be solved? See! It can’t solve ‘real’ problems.”

“Is it healthy for you to be so excited? Are you just using a machine as a placeholder for the genuine human connection you so desperately desire?”

Then came warnings from AI researchers. Gary Marcus wrote a blog post in late December arguing that we should not be overly impressed with ChatGPT. Marcus provided several examples demonstrating how ChatGPT “makes stuff up, regularly.”

How dependent should I be on a computer program that is highly skilled at appearing intelligent, but is incapable of causal reasoning? These LLMs are, in the words of ChatGPT, “expensive pattern-matching machines that we should call industrial statisticians instead of artificial intelligence.”

The final straw was seeing my Twitter feed become flooded with AI noise. People were using ChatGPT to create complex software without knowing how to code, write essays for school, and devise various successful money-making schemes.

All of a sudden AI was everywhere. My inner contrarian was activated.

By early January I had concluded that we, as a society, are not collectively wise enough to use this tool in a way that is net positive. ChatGPT is clearly increasing our productivity. But towards what end? Is greater productivity a good thing for a society that in so many ways seems to be going in the wrong direction?

Did we just strap high-powered rockets onto the Titanic and remove the steering wheel while the ship is headed straight for a giant iceberg?

I can count the number of times I’ve seriously engaged with ChatGPT since then on a single hand.

Enter Systems Science

“What characterizes AI doomers (as well as many pessimists) is a limitless imagination for catastrophe scenarios and a complete lack of imagination (or competence) for ways to avoid them.” — Yann LeCun

Advancing the state of systems science and increasing the number of systems scientists in the world can help us create AI systems that serve humanity instead of crippling us.

This is a strong intuition that I hold. It is a hunch that I’d like to develop into a testable hypothesis.

I lack the knowledge or experience to prove that my intuition is correct, but I have seen several examples which suggest that systems science can help provide some of the essential ingredients needed for the wise integration of AI into our society.

Concretely, a society that encourages education in systems science will have greater systems literacy, better systems engineering, and more effective interdisciplinary research.

Systems Literacy

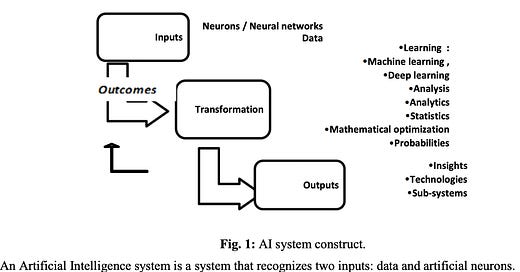

Systems literacy, a basic capacity to reason about the world in terms of systems, can help people understand the capabilities and limits of AI. Taking a systems approach to the concept of AI helps us see how the different parts of artificially intelligent systems fit together to form a coherent whole.

Systems like ChatGPT take inputs in the form of data (vast amounts of publicly available text from the internet) and artificial neural networks. They use processes such as machine learning and statistics to handle the transformation of this data. Transformed data turns into outputs which include insights and technologies. Outputs might include summaries of research papers, or lines of code that can be used to build computer programs.

Finally, a feedback process sends information from the outputs back to the original inputs. This triggers adjustments in the system’s behavior. The system learns and becomes increasingly “intelligent” as more people interact with it and feed it data.
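To make the input, process, output, and feedback loop concrete, here is a minimal Python sketch. It is a toy, not a description of how ChatGPT is actually built: the `ToySystem` class, its single `weight`, and the `learning_rate` value are all hypothetical names and numbers chosen for illustration. The only point is to show feedback from outputs adjusting the system's later behavior.

```python
from dataclasses import dataclass


@dataclass
class ToySystem:
    """A toy input -> process -> output -> feedback loop (not an LLM)."""
    weight: float = 0.0          # internal state that feedback adjusts
    learning_rate: float = 0.5   # how strongly feedback changes that state

    def process(self, x: float) -> float:
        # Process: transform an input into an output.
        return self.weight * x

    def feedback(self, x: float, target: float) -> float:
        # Feedback: compare the output with the desired result,
        # then adjust the internal state to shrink the gap.
        error = target - self.process(x)
        self.weight += self.learning_rate * error * x
        return error


def run(system: ToySystem, examples: list[tuple[float, float]], rounds: int) -> list[float]:
    """Feed the same examples through the loop and record the absolute errors."""
    errors = []
    for _ in range(rounds):
        for x, target in examples:
            errors.append(abs(system.feedback(x, target)))
    return errors


system = ToySystem()
examples = [(1.0, 2.0)]  # hypothetical data: the desired output is 2 * input
errors = run(system, examples, rounds=5)
print(errors)  # → [2.0, 1.0, 0.5, 0.25, 0.125]
```

Each pass through the loop halves the error, which is the sense in which a system with feedback "learns" from interaction. Real machine learning systems adjust billions of such values rather than one.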

AI systems can be easily understood as processes, just like the human brain and political system that I examined when introducing principle #2 of systems science.

By increasing systems literacy we can ensure that more people, regardless of their area of expertise, have the conceptual frameworks and language needed to quickly develop a basic understanding of how LLMs work. This knowledge can empower us to use LLMs as tools, rather than becoming tools used by them.

Systems Engineering

Tyler Cody is a systems engineer who specializes in machine learning and artificial intelligence. Cody found that by using systems theory as a conceptual framework, he was able to improve the performance of a learning algorithm designed to assess the health of hydraulic actuators. These are devices that help power widely used essential machinery, from cranes and bridges to missile launchers and rocket engines.

The standard approach to maintaining these actuators is to service them at regularly timed intervals. By using learning algorithms that monitor their conditions, we can service them only when needed. This leads to reduced costs and improved utility.

Traditional machine learning approaches can assess the health of an actuator with 77% accuracy. By adding systems knowledge to the machine learning algorithm, Cody increased its performance to 88%, a significant gain considering the consequences of inaccuracy. Based on this experience, he concluded that systems theory can help us develop “principled methodologies for AI systems engineering that are firmly rooted in mathematical principles.”
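One way to see why this gain matters is to look at errors rather than accuracy. The short Python sketch below works through the arithmetic. It assumes that "accuracy" means the share of health assessments that are correct, and it uses a hypothetical batch of 1,000 assessments purely for illustration. Neither assumption comes from Cody's paper.

```python
def errors_per_thousand(accuracy_pct: int) -> int:
    """Expected wrong assessments out of 1,000, given accuracy in whole percent."""
    return (100 - accuracy_pct) * 10


baseline_pct = 77  # traditional machine learning approach (figure from the text)
improved_pct = 88  # with systems knowledge added (figure from the text)

baseline_errors = errors_per_thousand(baseline_pct)
improved_errors = errors_per_thousand(improved_pct)

point_gain = improved_pct - baseline_pct
relative_error_reduction = (baseline_errors - improved_errors) / baseline_errors

print(f"Accuracy gain: {point_gain} percentage points")
print(f"Errors per 1,000 assessments: {baseline_errors} -> {improved_errors}")
print(f"Relative reduction in errors: {relative_error_reduction:.1%}")  # → 47.8%
```

Under these assumptions, an 11-point gain in accuracy cuts the number of wrong assessments almost in half, from 230 to 120 per 1,000.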

The artificial neural networks that power LLMs are “black boxes”: we don’t really know why they make the decisions they do. OpenAI recently admitted that we do not understand how they work. Their approach to solving this problem is to use LLMs as a tool for understanding LLMs, using the black box to study itself.

In contrast, being able to ground machine learning algorithms in well-defined systems principles could lead to greater efficiency and the development of systems whose behavior we can more easily understand.

Interdisciplinary Research

George Mobus is the researcher who inspired my passion for systems science. His career has encompassed a diverse range of activities: maintaining nuclear submarines in the Navy, managing an electronics design and manufacturing company, and teaching computer science to graduate students.

George received a patent in 1994 for his work on artificial neurons that mimic neurons in the brain much more realistically than the types of neural networks that power modern LLMs. He authored numerous papers focused on biologically inspired models of neural processing which were implemented in robots.

He credits his success to the education he got in systems sciences while doing his MBA. The formal methodologies for applied systems science that he outlines in his most recent textbook are the result of decades of lived experience.

George sees systems science as being essential for fostering interdisciplinary collaboration. This view is informed by his experience sitting in on meetings for the Human Genome Project, where he saw how an orientation towards systemic thinking helped computer scientists, chemists, and biologists effectively collaborate across disciplinary boundaries.

It is essential that a large variety of people from various disciplines develop the capacity to effectively engage in the process of studying and deploying AI. Systems science can provide conceptual frameworks, common language, and methods that allow psychologists, economists, and policymakers across the globe to actively collaborate on interdisciplinary AI research. This can help ensure the technology is stewarded holistically in a wise way that serves all of humanity.

Looking Ahead

“Once, men turned their thinking over to machines in the hope that this would set them free. But that only permitted other men with machines to enslave them.” — Frank Herbert, Dune (1965)

I’m not afraid of super-intelligent AI emerging, developing consciousness, and trying to destroy humanity. I am afraid of stumbling into a world where OpenAI or one of its competitors is the mega corporation controlling the AI systems that all of humanity depends on.

But I can imagine a different future. One where there is an abundance of open-source AIs flourishing. Where a wise, systems-literate population uses AI as a tool to diagnose disease, produce food, manufacture abundant housing, and design healthier economic and governance systems. Perhaps these AIs will be embedded with causal reasoning, grounded in systems principles, and only use LLMs as subsystems for narrow tasks.

To paraphrase Daniel’s thoughts from the ISSS meeting in response to fears about using LLMs, “conscientious individuals shouldn’t sideline themselves so others take the wheel; rather, there is a way we can make the right difference as systems scientists.”

As I was writing this piece, a co-worker was raving about ChatGPT-4. I decided to use it for two specific tasks while writing. It was very useful in helping me clarify a few specific sentences in Tyler Cody’s paper. I was less impressed by its “thoughts” (in the image below) on systems science and AI, although the results were interesting.

I don’t know how my relationship with AI will unfold, but I’m glad that my head is no longer stuck in the sand.
